CI for InstructLab

Unit tests

Unit tests are designed to test specific InstructLab components or features in isolation. In CI, these tests are run on Python 3.11 and Python 3.10 on CPU-only Ubuntu runners as well as Python 3.11 on CPU-only macOS runners. Generally, new code should add or modify unit tests.

All unit tests currently live in the tests/ directory and are run with pytest via tox.
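As a rough illustration of what testing a component in isolation looks like, here is a minimal pytest-style sketch. The helper `parse_tshirt_size` and the file name `tests/test_example.py` are hypothetical, invented for this example, and are not part of InstructLab.

```python
# tests/test_example.py (hypothetical file name, for illustration only)

VALID_SIZES = ("small", "medium", "large", "x-large")


def parse_tshirt_size(value: str) -> str:
    """Hypothetical helper: normalize a t-shirt size string or raise ValueError."""
    size = value.strip().lower()
    if size not in VALID_SIZES:
        raise ValueError(f"unknown t-shirt size: {value!r}")
    return size


def test_parse_tshirt_size_normalizes_case_and_whitespace():
    # The function is exercised directly, with no model, GPU, or network involved.
    assert parse_tshirt_size("  Medium ") == "medium"


def test_parse_tshirt_size_rejects_unknown_values():
    # An unknown size should raise instead of being passed through silently.
    try:
        parse_tshirt_size("tiny")
    except ValueError:
        pass
    else:
        raise AssertionError("expected ValueError for an unknown size")
```

With pytest's default discovery rules, functions whose names start with `test_` in files matching `test_*.py` are collected automatically, so a file like this placed under tests/ would be picked up without extra configuration.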

Functional tests

Functional tests are designed to test InstructLab components or features in tandem, but not necessarily as part of a complex workflow. In CI, these tests are run on Python 3.11 and Python 3.10 on CPU-only Ubuntu runners as well as Python 3.11 on CPU-only macOS runners. New code may not always need a functional test, but contributors should add one where possible.

The functional test script is shell-based and can be found at scripts/functional-tests.sh.

End-to-end (E2E) tests

There are two E2E test scripts.

The first can be found at scripts/e2e-ci.sh. This script tests the InstructLab workflow end to end, mimicking real user behavior, across three different “t-shirt sizes” of systems. Most E2E CI jobs use this script.

The second can be found at scripts/e2e-custom.sh. This script takes arguments that control which features are used, so that test coverage can vary with the resources available on a given test runner. The “custom” E2E CI job uses this script, though you can specify another script, such as e2e-ci.sh, if you want to test changes to a different code path that doesn’t automatically run against a pull request.

There is currently a “small” t-shirt size E2E job and a “medium” t-shirt size E2E job.

These jobs run automatically on all PRs and after commits merge to main or release branches. They depend on the successful completion of any linting-type jobs.

There is also a “large” t-shirt size E2E job that can be triggered manually on the actions page for the repository. It also runs automatically against the main branch at 11 AM UTC every day.
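To work out when the next automatic run is due, the daily schedule can be sketched as below. This is an illustration only: it assumes the schedule is a plain daily 11:00 UTC trigger (in five-field cron syntax, `0 11 * * *`), and the function name `next_daily_run` is made up for this example. The actual start time of a scheduled CI job can also lag behind the nominal time.

```python
from datetime import datetime, timedelta, timezone

# Assumption: a daily 11:00 UTC schedule, written in five-field cron syntax.
DAILY_CRON = "0 11 * * *"


def next_daily_run(now: datetime, hour_utc: int = 11) -> datetime:
    """Return the first scheduled run strictly after `now`.

    `now` must be timezone-aware so it can be converted to UTC reliably.
    """
    if now.tzinfo is None:
        raise ValueError("now must be timezone-aware")
    now_utc = now.astimezone(timezone.utc)
    # Today's run time in UTC; roll over to tomorrow if it has already passed.
    candidate = now_utc.replace(hour=hour_utc, minute=0, second=0, microsecond=0)
    if candidate <= now_utc:
        candidate += timedelta(days=1)
    return candidate
```

For example, calling `next_daily_run(datetime.now(timezone.utc))` returns a UTC timestamp that can be converted to local time with `.astimezone()`.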

E2E Test Coverage Options

You can specify the following flags to test the small, medium, and large t-shirt sizes with the e2e-ci.sh script.

| Flag | Feature |
|---|---|
|  | Run the e2e workflow for the small t-shirt size of hardware |
|  | Run the e2e workflow for the medium t-shirt size of hardware |
|  | Run the e2e workflow for the large t-shirt size of hardware |
|  | Run the e2e workflow for the x-large t-shirt size of hardware |
|  | Preserve the E2E_TEST_DIR for debugging |

You can specify the following flags to test various features of ilab with the e2e-custom.sh script.

| Flag | Feature |
|---|---|
|  | Run model evaluation |
|  | Run “fullsize” SDG |
|  | Run the ‘simple’ training pipeline with 4 bit quantization |
|  | Run the ‘simple’ training pipeline |
|  | Use the ‘full’ training pipeline optimized for CPU and MPS rather than simple training |
|  | Use the ‘accelerated’ training library rather than simple or full training |
|  | Run minimal configuration (lower number of instructions and training epochs) |
|  | Use Mixtral model (4-bit quantized) instead of Merlinite (4-bit quantized) |
|  | Use the phased training within the ‘full’ training library |
|  | Run with vLLM for serving |

Current E2E Jobs

| Name | T-Shirt Size | Runner Host | Instance Type | OS | GPU Type | Script | Flags | Runs when? | Slack/Discord reporting? |
|---|---|---|---|---|---|---|---|---|---|
|  | Small | AWS |  | CentOS Stream 9 | 1 x NVIDIA Tesla T4 w/ 16 GB VRAM |  |  | Pull Requests, Push to | No |
|  | Medium | AWS |  | CentOS Stream 9 | 1 x NVIDIA L4 w/ 24 GB VRAM |  |  | Pull Requests, Push to | No |
|  | Large | AWS |  | CentOS Stream 9 | 4 x NVIDIA L40S w/ 48 GB VRAM (192 GB) |  |  | Manually by Maintainers, Automatically against | Yes |
|  | X-Large | AWS |  | CentOS Stream 9 | 8 x NVIDIA L40S w/ 48 GB VRAM (384 GB) |  |  | Manually by Maintainers, Automatically against | Yes |

E2E Test Coverage Matrix

| Area | Feature |  |  |  |  |
|---|---|---|---|---|---|
| Serving | llama-cpp | ✅ | ✅ | ⎯ | ⎯ |
|  | vllm | ⎯ | ✅ | ✅ | ✅ |
| Generate | simple | ✅ | ⎯ | ⎯ | ⎯ |
|  | full | ⎯ | ✅ | ✅ | ✅ |
| Training | simple | ✅(*1) | ⎯ | ⎯ | ⎯ |
|  | full | ⎯ | ✅ | ⎯ | ⎯ |
|  | accelerated (multi-phase) | ⎯ | ⎯ | ✅ | ✅ |
| Eval | eval | ⎯ | ✅(*2) | ✅ | ✅ |

Points of clarification (*):

1. The simple training pipeline uses 4-bit quantization. We cannot use the trained model here due to #579.
2. MMLU Branch is not run, as the full SDG pipeline does not create the needed files in the tasks directory when only training against a skill.

Discord/Slack reporting

Some E2E jobs send their results to the channel #e2e-ci-results via the Son of Jeeves bot in both Discord and Slack. You can see which jobs currently have reporting via the “Current E2E Jobs” table above.

In Slack, this has been implemented via the official Slack GitHub Action. In Discord, we use actions/actions-status-discord and the built-in channel webhooks feature.

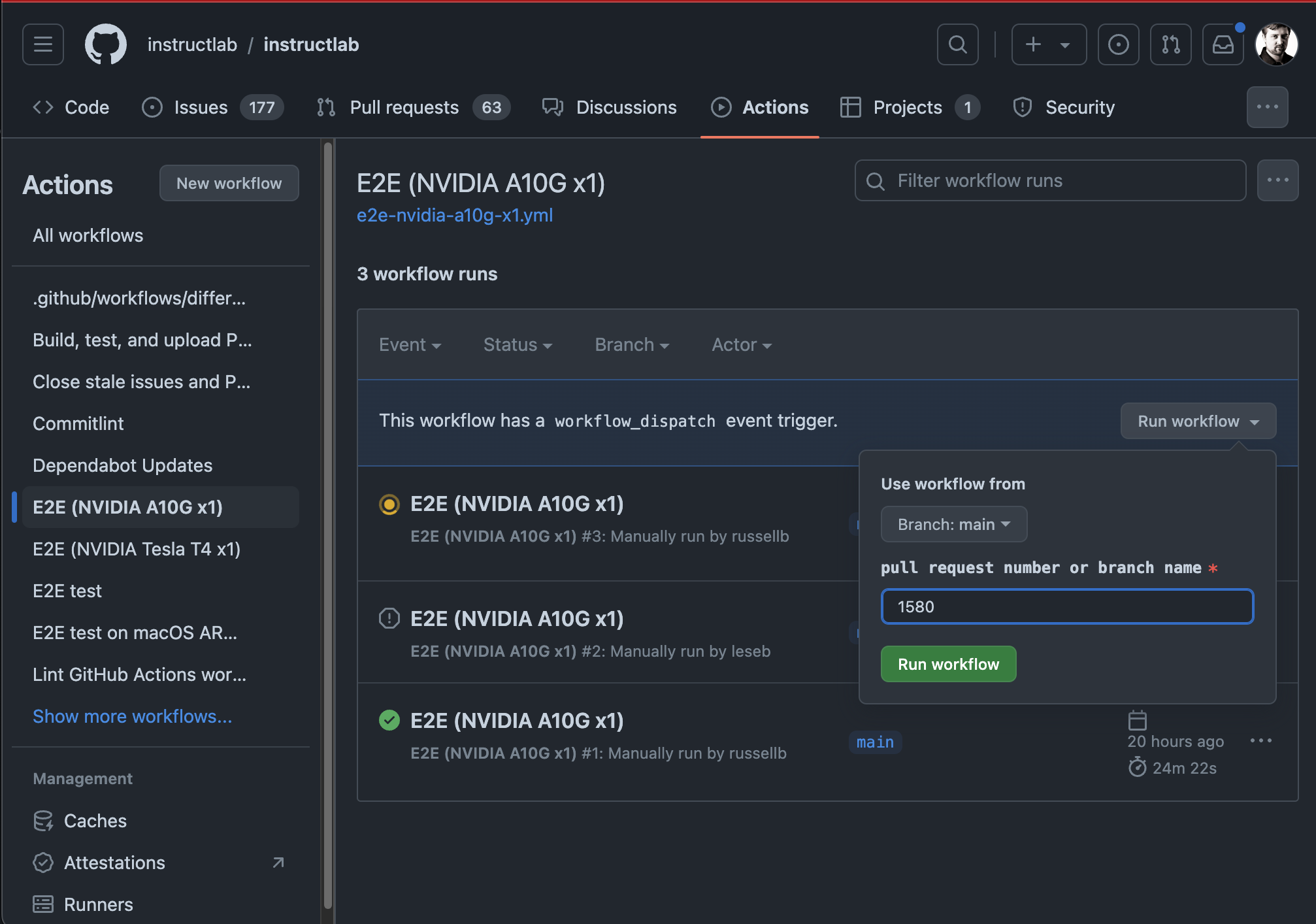
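For readers unfamiliar with channel webhooks, the following is a minimal sketch of how a result message could be posted to a Discord webhook. It is not how the GitHub Action mentioned above is implemented. The function names, the message wording, and the `User-Agent` value are assumptions made for this example; the only Discord-specific detail relied on is that a webhook accepts a JSON body with a `content` field.

```python
import json
import urllib.request


def build_discord_payload(job_name: str, status: str, run_url: str) -> dict:
    """Build a JSON-serializable body; Discord webhooks read the `content` field."""
    # The message format is illustrative, not the format used by the real jobs.
    return {"content": f"E2E job '{job_name}' finished: {status} ({run_url})"}


def post_json(webhook_url: str, payload: dict) -> int:
    """POST the payload as JSON and return the HTTP status code."""
    request = urllib.request.Request(
        webhook_url,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            # Some services reject urllib's default user agent, so set one explicitly.
            "User-Agent": "e2e-report-sketch/0.1",
        },
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=10) as response:
        return response.status
```

The webhook URL acts as a credential, so in a CI setting it would normally come from a secret rather than being written into the workflow file.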

Triggering an E2E job via GitHub Web UI

E2E jobs that can be launched manually take an input field that specifies the PR number or git branch to run against. If you run a job against a PR, it automatically posts a comment to the PR when the tests begin and end, so it’s easier for those involved in the PR to follow the results.

1. Visit the Actions tab.
2. Click on one of the E2E workflows on the left side of the page.
3. Click on the Run workflow button on the right side of the page.
4. Enter a branch name or a PR number in the input field.
5. Click the green Run workflow button.
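Because the input field accepts either a PR number or a branch name, a workflow has to decide which one it was given. The sketch below shows one plausible way to do that. It is an assumption made for illustration, not the logic of the actual workflows, and `resolve_ref` is a hypothetical name. It relies on GitHub exposing the head of pull request N at the ref `refs/pull/N/head`.

```python
def resolve_ref(user_input: str) -> str:
    """Map a workflow input to a git ref.

    Assumption for this sketch: an all-digit input is a PR number,
    and anything else is treated as a branch name.
    """
    value = user_input.strip()
    if not value:
        raise ValueError("expected a PR number or a branch name")
    if value.isascii() and value.isdigit():
        # GitHub exposes the head commit of pull request N at refs/pull/N/head.
        return f"refs/pull/{value}/head"
    return f"refs/heads/{value}"
```

One limitation of this heuristic is that a branch whose name consists only of digits would be mistaken for a PR number.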

Here is an example of using the GitHub Web UI to launch an E2E workflow: